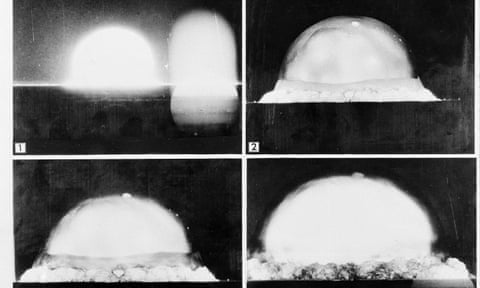

Images of the first atomic bomb test in 1945 in New Mexico. Photograph: AP

A new essay on the rise of superintelligent machines pivots from being a warning to humanity to a rallying cry for an industrial complex to bolster American military defence

Last modified on 2024 Jun 15

Ten years ago, the Oxford philosopher Nick Bostrom published Superintelligence, a book exploring how superintelligent machines could be created and what the implications of such technology might be. One was that such a machine, if it were created, would be difficult to control and might even take over the world in order to achieve its goals (which in Bostrom’s celebrated thought experiment was to make paperclips).

The book was a big seller, triggering lively debates but also attracting a good deal of disagreement. Critics complained that it was based on a simplistic view of “intelligence”, that it overestimated the likelihood of superintelligent machines emerging any time soon and that it failed to suggest credible solutions for the problems that it had raised. But it had the great merit of making people think about a possibility that had hitherto been confined to the remoter fringes of academia and sci-fi.

Now, 10 years later, comes another shot at the same target. This time, though, it’s not a book but a substantial (165-page) essay with the title Situational Awareness: The Decade Ahead. The author is a young German lad, Leopold Aschenbrenner, who now lives in San Francisco and hangs out with the more cerebral fringe of the Silicon Valley crowd. On paper, he sounds a bit like a wunderkind in the Sam Bankman-Fried mould – a maths whiz who graduated from an elite US university in his teens, spent some time in Oxford with the Future of Humanity Institute crowd and worked for OpenAI’s “superalignment” team (now disbanded), before starting an investment company focused on AGI (artificial general intelligence) with funding from the Collison brothers, Patrick and John – founders of Stripe – a pair of sharp cookies who don’t back losers.

So this Aschenbrenner is smart, but he also has skin in the game. The second point may be relevant because essentially the thrust of his mega-essay is that superintelligence is coming (with AGI as a stepping-stone) and the world is not ready for it.

The essay has five sections. The first charts the path from GPT-4 (where we are now) to AGI (which he thinks might arrive as early as 2027). The second traces the hypothetical path from AGI to real superintelligence. The third discusses four “challenges” that superintelligent machines will pose for the world. Section four outlines what he calls the “project” that’s needed to manage a world equipped with (dominated by?) superintelligent machines. Section five is Aschenbrenner’s message to humanity in the form of three “tenets” of “AGI realism”.

In his view of how AI will progress in the near-term future, Aschenbrenner is basically an optimistic determinist, in the sense that he extrapolates the recent past on the assumption that trends continue. He cannot see an upward-sloping graph without extending it. He grades LLMs (large language models) by capability. Thus GPT-2 was “preschooler” level; GPT-3 was “elementary schooler”; GPT-4 is “smart high schooler” and a massive increase in computing power will apparently get us by 2028 to “models as smart as PhDs or experts that can work beside us as co-workers”. En passant, why do AI boosters always regard holders of doctorates as the epitome of human perfection?

After 2028 comes the really big leap: from AGI to superintelligence. In Aschenbrenner’s universe, AI doesn’t stop at human-level capability. “Hundreds of millions of AGIs could automate AI research, compressing a decade of algorithmic progress into one year. We would rapidly go from human-level to vastly superhuman AI systems. The power – and the peril – of superintelligence would be dramatic.”

The essay’s third section contains an exploration of what such a world may be like by focusing on four aspects of it: the unimaginable (and environmentally disastrous) computation requirements needed to run it; the difficulties of maintaining AI laboratory security in such a world; the problem of aligning machines with human purposes (difficult but not impossible, Aschenbrenner thinks); and the military consequences of a world of superintelligent machines.

It’s only when he gets to the fourth of these topics that Aschenbrenner’s analysis really starts to come apart at the themes. Running through his thinking, like the message in a stick of Blackpool rock, is the analogy of nuclear weapons. He views the US as being at the stage with AI it was after J Robert Oppenheimer’s initial Trinity test in New Mexico – ahead of the USSR, but not for long. And in this metaphor, of course, China plays the role of the Soviet empire.

Suddenly, superintelligence has morphed from being a problem for humanity into an urgent matter of US national security. “The US has a lead,” he writes. “We just have to keep it. And we’re screwing that up right now. Most of all, we must rapidly and radically lock down the AI labs, before we leak key AGI breakthroughs in the next 12-24 months … We must build the computer clusters in the US, not in dictatorships that offer money. And yes, American AI labs have a duty to work with the intelligence community and the military. America’s lead on AGI won’t secure peace and freedom by just building the best AI girlfriend apps. It’s not pretty – but we must build AI for American defence.”

All that’s needed is a new Manhattan Project. And an AGI-industrial complex.

–

What I’ve been reading

Despot shot

In the Former Eastern Bloc, They’re Terrified of a Trump Presidency is an interesting piece in the New Republic about people who know a thing or two about living under tyranny.

Normandy revisited

Historian Adam Tooze’s D-Day 80 Years On: World War II and the ‘Great Acceleration’ is a reflection on the wartime anniversary.

Lawful impediment

Monopoly Round-Up: The Harvey Weinstein of Antitrust is Matt Stoller’s blogpost on Joshua Wright, the lawyer who over many years had a devastating impact on antitrust enforcement in the US.
