NVIDIA has partnered with Hippocratic AI to develop AI-powered healthcare agents. Image: NVIDIA/Hippocratic AI

Workforce shortages are simply an imbalance between need and supply. In healthcare, this means being unable to provide the right number of people with the right skills in the right places to deliver the right services to the right people.

The World Health Organization (WHO) has projected a shortfall of 10 million health workers by 2030, mostly in low- and middle-income countries. However, the pinch produced by shortages is already being felt in rural and remote settings in high-income countries such as the US and Australia. Ostensibly to address the global healthcare staffing crisis, NVIDIA recently announced its partnership with Hippocratic AI to develop generative AI-powered ‘healthcare agents’.

“With generative AI, we have the opportunity to address some of the most pressing needs of the healthcare industry,” said Munjal Shah, cofounder and CEO of Hippocratic AI. “We can help mitigate widespread staffing shortages and increase access to high-quality care – all while improving outcomes for patients. NVIDIA’s technology stack is critical to achieving the conversational speed and fluidity necessary for patients to naturally build an emotional connection with Hippocratic’s Generative AI Healthcare Agents.”

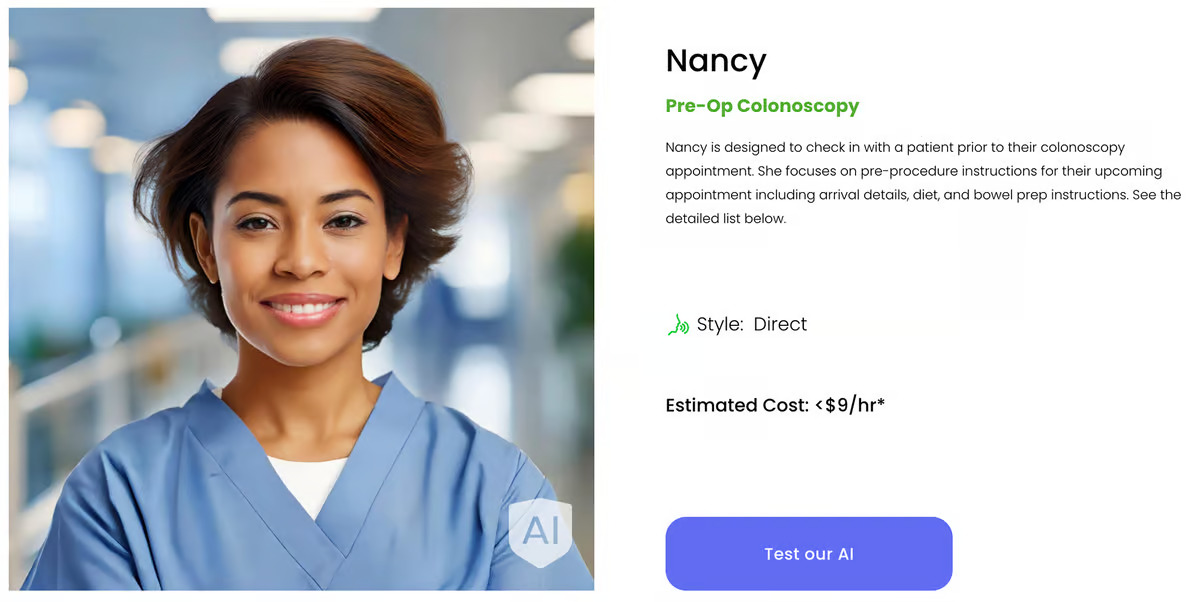

The agents build on Polaris, Hippocratic’s safety-focused large language model (LLM) and the first designed for real-time patient-AI healthcare conversations. Polaris’ one-trillion-parameter ‘constellation system’ combines a primary LLM agent that drives patient-friendly conversation with several specialist support agents focused on healthcare tasks performed by nurses, social workers, and nutritionists, to increase safety and reduce AI hallucinations. The agents, unveiled at the recent NVIDIA GTC, connect to NVIDIA Avatar Cloud Engine (ACE) microservices and use NVIDIA Inference Microservices, or NIM, for low-latency inferencing and speech recognition.

Super-low latency is key to a platform that lets patients establish a natural, responsive connection with the AI agents they’re interacting with. Hippocratic is working with NVIDIA to keep refining its technology so it can deliver that.

“Voice-based digital agents powered by generative AI can usher in an age of abundance in healthcare, but only if the technology responds to patients as a human would,” said Kimberly Powell, vice president of Healthcare at NVIDIA. “This type of engagement will require continued innovation and close collaboration with companies, such as Hippocratic AI, developing cutting-edge solutions.”

Hippocratic has already tested Polaris’ capabilities, recruiting US-licensed nurses and physicians to pose as patients and perform end-to-end conversational evaluations of the system. The company says Polaris outperformed OpenAI’s GPT-4, Meta’s LLaMA-2 70B, and flesh-and-blood nurses on safety benchmarks. The results have been published on the open-access repository arXiv.

The announcement that Hippocratic AI and NVIDIA are partnering to develop AI-powered healthcare agents elicited a wide range of comments on Reddit. Perhaps the most telling was the comment by Then_Passenger_6688: “AI will be doing the ‘human’ stuff of bedside manner, humans will be doing the ‘robot’ stuff of inserting tubes and injecting stuff.”

It’s important to remember that, at least at this stage, the healthcare agents are limited to talking with patients by phone or video, assisting with tasks such as health risk assessments, chronic illness management, pre-op check-ins, and post-discharge checkups. At this stage. As we all know, AI advances at an astonishing rate.

The media has also highlighted the cost of using an AI healthcare agent compared with the cost of actual nurses. Hippocratic’s website advertises all its agents at less than US$9 per hour. By contrast, the US Bureau of Labor Statistics listed the estimated mean hourly wage for registered nurses in 2022 as US$42.80. But what does a patient get for that higher amount?

As a former registered nurse working in a hospital setting, I can say there are a lot of non-nursing duties that don’t appear in any job description: Housekeeping chores, delivering and removing meal trays, putting flowers into vases and discarding them when they’ve died, transporting non-critical patients for surgical and medical imaging procedures, connecting the TV, buying a particular newspaper or magazine for a patient, answering relatives’ questions about their loved one’s progress (usually more than once a shift), troubleshooting lights/toilets/pan-flushers that aren’t working … I could go on. Then there’s the ‘dark side’ of nursing: Talking down an agitated or angry patient or relative, and receiving verbal and/or physical abuse from any number of visitors.

I’m in two minds about the introduction of AI healthcare agents. Obviously, the main purpose of any valuable technology, such as AI, is to solve problems or make improvements. Do healthcare agents do this? Probably, yes. AI can improve medical record keeping, make services more efficient and, potentially, safer, and lighten workloads. But consider this: AI does a patient’s pre-op check-in, which removes a fairly straightforward administrative task and frees up some of the nurse’s time. However, whose familiar face does the patient look for when they’re admitted to the hospital? It can’t be the AI’s. That pre-admission interaction is more than collecting information; it’s crucial to establishing rapport and trust, calming anxiety, and obtaining a holistic view of the patient.

In benchmark testing, Polaris performed slightly better than human nurses in identifying a laboratory reference range (96% versus 94%). However, all the lab results I’ve looked at include a reference range, and nurses know how and where to look for one, even if it’s not included. When assessing knowledge of a specific medication’s impact on lab results, Polaris scored 80%, while human nurses scored 63%. That’s all well and good, but the comparison overlooks the nurse’s ability to check for themselves whether a deranged lab result has been caused by medication. And this autonomy has a flow-on effect. The out-of-kilter result is communicated to the treating doctor and/or the nurse in charge, which serves two purposes: It ensures the patient’s well-being and informs the treating team of a potential issue.

My fear is that the introduction of AI-powered health agents will contribute to the compartmentalization of healthcare, particularly nursing. Medicine and surgery have already separated into specialized groups and even smaller subgroups within those groups, isolating these disciplines so that the knowledge and experience gained in one is not readily transferred to another.

In partnering with NVIDIA, Hippocratic AI arguably has its heart in the right place.

“We’re working with NVIDIA to continue refining our technology and amplify the impact of our work of mitigating staffing shortages while enhancing access, equity, and patient outcomes,” said Shah.

Let’s hope they stay true to their motto, “Do no harm.” The motto is based on the phrase “First, do no harm,” a dictum often attributed to Hippocrates that doesn’t actually appear in the original version of the Hippocratic Oath.

Source: Hippocratic AI, NVIDIA
